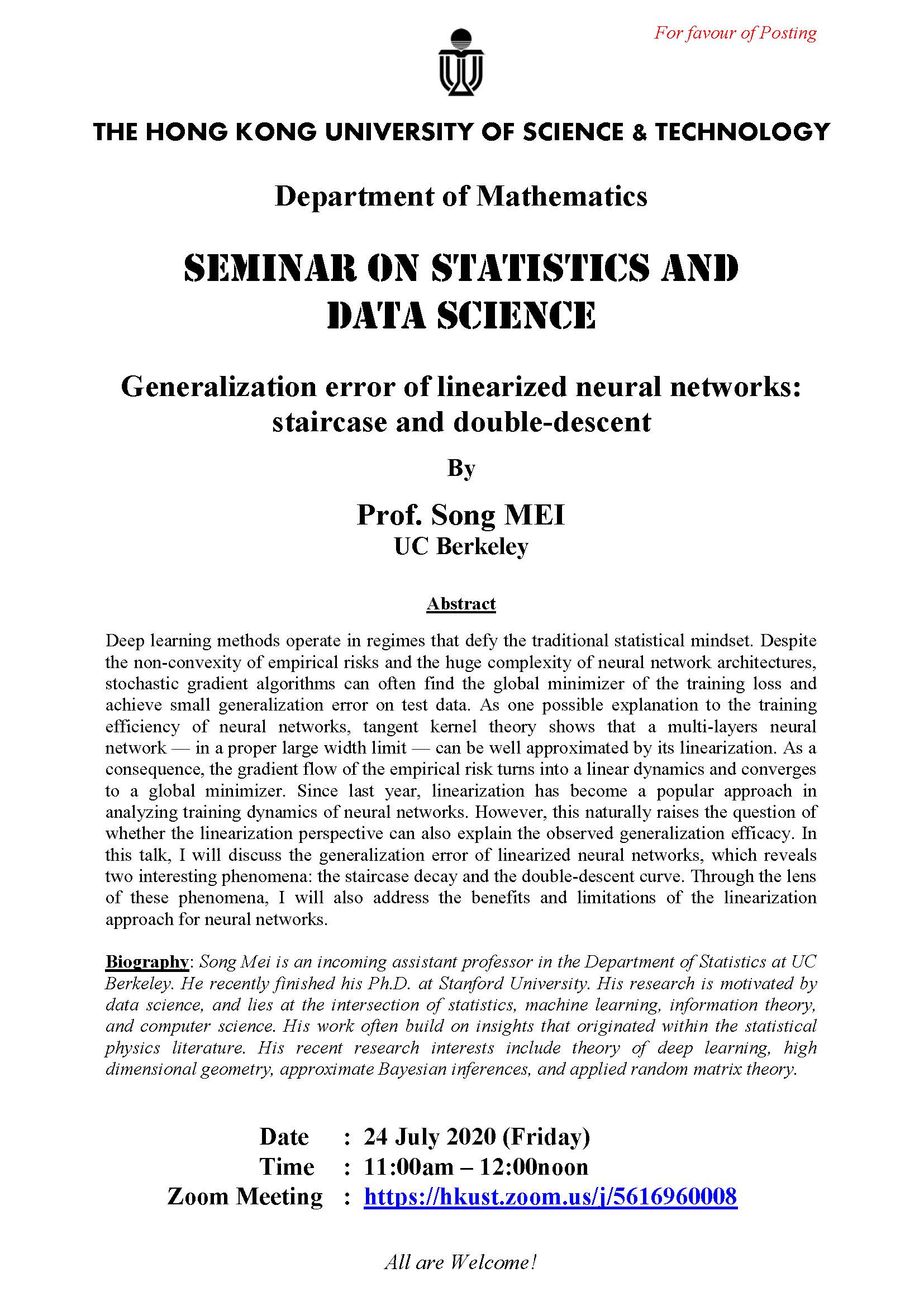

Deep learning methods operate in regimes that defy the traditional statistical mindset. Despite the non-convexity of empirical risks and the huge complexity of neural network architectures, stochastic gradient algorithms can often find the global minimizer of the training loss and achieve small generalization error on test data. As one possible explanation for the training efficiency of neural networks, tangent kernel theory shows that a multi-layer neural network, in a proper large-width limit, can be well approximated by its linearization. As a consequence, the gradient flow of the empirical risk becomes a linear dynamics and converges to a global minimizer. Since last year, linearization has become a popular approach for analyzing the training dynamics of neural networks. However, this naturally raises the question of whether the linearization perspective can also explain the observed generalization efficacy. In this talk, I will discuss the generalization error of linearized neural networks, which reveals two interesting phenomena: the staircase decay and the double-descent curve. Through the lens of these phenomena, I will also address the benefits and limitations of the linearization approach for neural networks.

24 Jul 2020

11am - 12pm

Where

https://hkust.zoom.us/j/5616960008

Speakers/Performers

Prof. Song MEI

UC Berkeley

Organizer(s)

Department of Mathematics

Contact/Enquiries

mathseminar@ust.hk

Audience

Alumni, Faculty and Staff, PG Students, UG Students

Language(s)

English

Other Events

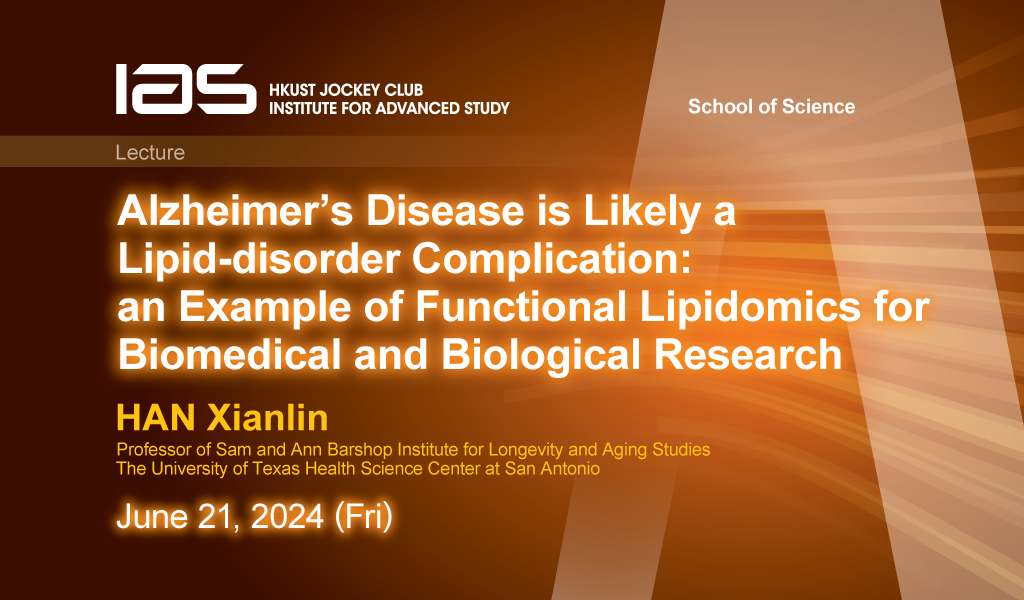

21 Jun 2024

Seminar, Lecture, Talk

IAS / School of Science Joint Lecture - Alzheimer’s Disease is Likely a Lipid-disorder Complication: an Example of Functional Lipidomics for Biomedical and Biological Research

Abstract

Functional lipidomics is a frontier in lipidomics research, which identifies changes of cellular lipidomes in disease by lipidomics, uncovers the molecular mechanism(s) leading to the chan...

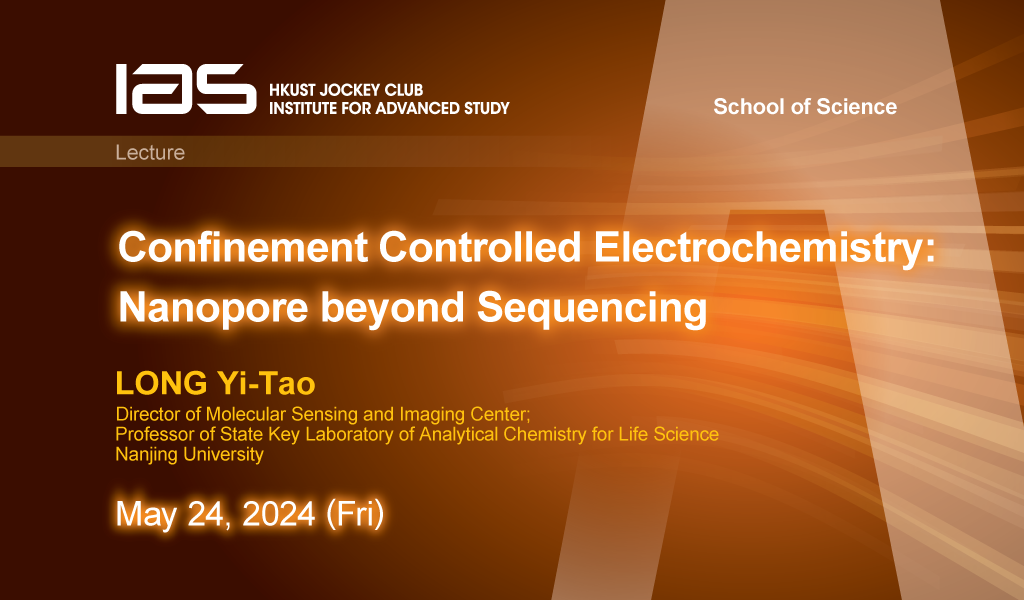

24 May 2024

Seminar, Lecture, Talk

IAS / School of Science Joint Lecture - Confinement Controlled Electrochemistry: Nanopore beyond Sequencing

Abstract

Nanopore electrochemistry refers to the promising measurement science based on elaborate pore structures, which offers a well-defined geometric confined space to adopt and characterize sin...