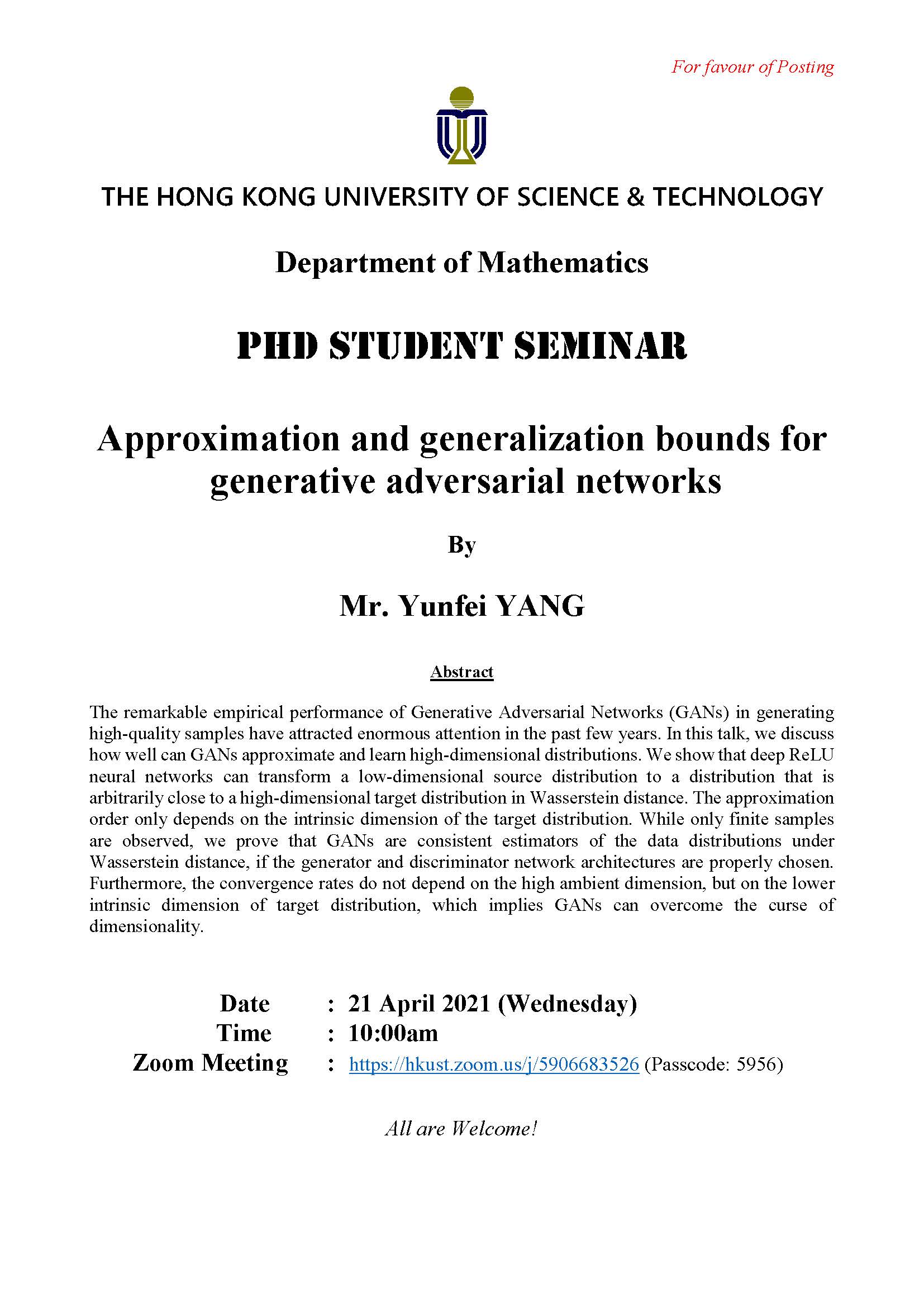

The remarkable empirical performance of Generative Adversarial Networks (GANs) in generating high-quality samples has attracted enormous attention in the past few years. In this talk, we discuss how well GANs can approximate and learn high-dimensional distributions. We show that deep ReLU neural networks can transform a low-dimensional source distribution into a distribution that is arbitrarily close to a high-dimensional target distribution in Wasserstein distance. The approximation order depends only on the intrinsic dimension of the target distribution. When only finitely many samples are observed, we prove that GANs are consistent estimators of the data distribution under Wasserstein distance, provided the generator and discriminator network architectures are properly chosen. Furthermore, the convergence rates depend not on the high ambient dimension but on the lower intrinsic dimension of the target distribution, which implies that GANs can overcome the curse of dimensionality.
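For readers who want the objects in the abstract written out, the following is a minimal sketch of the setup under assumed notation; none of these symbols appear in the abstract itself. Here $\mu$ is the target distribution on $\mathbb{R}^d$, $\nu$ is the source distribution on $\mathbb{R}^k$ with $k$ small, $\mathcal{G}$ is a class of ReLU generator networks, $\mathcal{F}$ is a class of discriminator networks, and $X_1, \dots, X_n$ are i.i.d. samples from $\mu$. The first line is the standard Kantorovich-Rubinstein dual form of the Wasserstein-1 distance. The second is one common way to write a GAN estimator as a minimizer of an integral probability metric, which may differ in detail from the formulation used in the talk. The third states what consistency means in this notation.

```latex
% Wasserstein-1 distance in its dual (Kantorovich-Rubinstein) form
W_1(\mu, \gamma) = \sup_{\|f\|_{\mathrm{Lip}} \le 1}
    \Big\{ \mathbb{E}_{x \sim \mu}[f(x)] - \mathbb{E}_{y \sim \gamma}[f(y)] \Big\}

% Assumed form of the GAN estimator built from n samples X_1, ..., X_n
\hat{g}_n \in \operatorname*{arg\,min}_{g \in \mathcal{G}} \;
    \sup_{f \in \mathcal{F}}
    \Big\{ \mathbb{E}_{z \sim \nu}[f(g(z))] - \frac{1}{n} \sum_{i=1}^{n} f(X_i) \Big\}

% Consistency: the pushforward of the source under \hat{g}_n approaches the target
\mathbb{E}\, W_1\big( (\hat{g}_n)_{\#} \nu, \; \mu \big) \to 0
    \quad \text{as } n \to \infty
```

In this notation, the claim about dimension is that the rate at which the last quantity tends to zero is governed by the intrinsic dimension of $\mu$ rather than by the ambient dimension $d$.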